Testing Methodology for Big Data Projects

Exponential growth in data generation will push organizations to make Big Data part of their strategy as the de facto technology for deriving value from data. For the same reason, 2018 will be a year of rapid Big Data adoption. Everyone wants to catch that bus! However, the process for testing Big Data applications before deployment is still not fully developed, and that can make it a bumpy ride!

To address the lack of documentation and established processes for testing Big Data applications, I have tried to define a testing process based on my own experience. I have had the good fortune to work on some unique Big Data projects at Ellicium. To make things easy to follow, I will use the testing process I created for an IoT-based project for one of the largest telecom companies in India.

Our client was struggling to find a tool that could perform a detailed analysis of a huge volume of streaming data, most of it generated by customers' mobile phone usage on the network. The client wanted to use this analysis to give the marketing department the intelligence it needed to up its marketing game and improve customers' experience of the network.

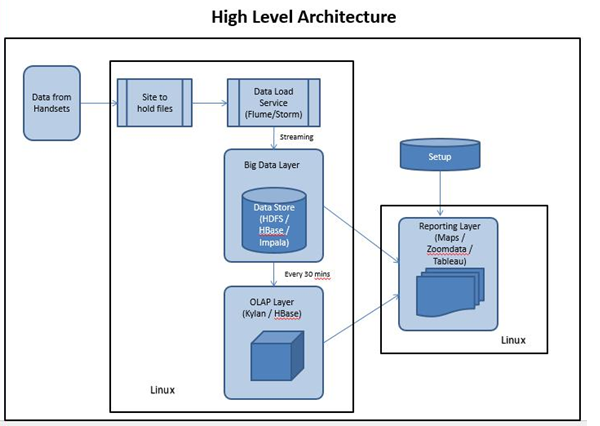

Following is the high-level architecture of that Application

As the architecture shows, there were a variety of independent processes, so each process needed its own testing methodology. Let’s see how I did it!

First and foremost, I categorized the processes based on their behavior. The image below shows the clusters we created.

Based on this categorization and the sequence of the processes, I created a testing flow. The exact steps we followed are described below.

Step 1: Data Staging Validation

When: Before data lands on Hadoop

Where: Local File System

How:

- Compare the number of files – We used shell scripts to compare the file counts in the source and destination folders for each time window. Because files were named after their creation time, the timestamp could be extracted from the filename.

- Validate the number of records – We used shell scripts to compare the number of records before and after processing.

- Identify outdated data – We used shell scripts to check whether any outdated data arrived from the sources. This happens when a user switches off their internet connection for a while; once it is switched back on, the device starts sending previously generated data to the data server. We identified such files and moved them out of the current process so they did not consume processing time and space unnecessarily.
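The shell scripts themselves are not reproduced here. As a rough illustration, the logic of the three staging checks can be sketched in Python. The filename convention (`<prefix>_YYYYMMDD_HHMM.csv`), the hourly buckets, the one-hour staleness threshold, and all function names are assumptions made for this example, not the project's actual scripts.

```python
import csv
import re
from collections import Counter
from datetime import datetime, timedelta
from typing import Iterable, List, Optional, Tuple

# Assumed naming convention: files are named after their creation time,
# e.g. usage_20180115_1005.csv -> 2018-01-15 10:05.
NAME_PATTERN = re.compile(r"_(\d{8}_\d{4})\.csv$")


def file_timestamp(name: str) -> Optional[datetime]:
    """Extract the creation timestamp embedded in a filename, or None if absent."""
    match = NAME_PATTERN.search(name)
    if match is None:
        return None
    return datetime.strptime(match.group(1), "%Y%m%d_%H%M")


def count_by_hour(names: Iterable[str]) -> Counter:
    """Count files per hourly bucket, using the timestamp in each filename."""
    counts: Counter = Counter()
    for name in names:
        ts = file_timestamp(name)
        if ts is not None:
            counts[ts.replace(minute=0)] += 1
    return counts


def compare_file_counts(source: Iterable[str], dest: Iterable[str]) -> dict:
    """Return {hour: (source_count, dest_count)} for hours where the counts differ."""
    src, dst = count_by_hour(source), count_by_hour(dest)
    return {
        hour: (src[hour], dst[hour])
        for hour in sorted(set(src) | set(dst))
        if src[hour] != dst[hour]
    }


def count_records(lines: Iterable[str], has_header: bool = True) -> int:
    """Count CSV records in an open file (or any iterable of lines)."""
    rows = sum(1 for _ in csv.reader(lines))
    return rows - 1 if has_header and rows else rows


def split_outdated(
    names: Iterable[str], now: datetime, max_age: timedelta = timedelta(hours=1)
) -> Tuple[List[str], List[str]]:
    """Split files into (current, outdated); outdated files are older than max_age.

    Files without a parseable timestamp are kept in the current batch.
    """
    current: List[str] = []
    outdated: List[str] = []
    for name in names:
        ts = file_timestamp(name)
        if ts is not None and now - ts > max_age:
            outdated.append(name)
        else:
            current.append(name)
    return current, outdated
```

To validate record counts, call `count_records` on a file before and after processing (for example, `with open(path, newline="") as f: count_records(f)`) and compare the two numbers. Files returned as outdated could then be moved to a separate folder, for instance with `shutil.move`.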

Step 2: Data Processing Validation

When: After processing or aggregating data stored in Hadoop

Where: HBase/HDFS

How:

- I fetched a few samples from the aggregated table using a Hive query and compared them with the corresponding raw data in the raw data table.

- I wrote a shell script that compares the number of records in the source files for a given period with the number of records stored in HBase for the same period, using a Hive query embedded in the same script.

- In one step, we split the location-network CSV files vertically by row key, because Flume could take only 30 columns at a time and we had more than 55. This makes data testing crucial: it is not only about horizontal (row-level) testing but also about vertical (column-level) testing. I wrote a Hive script that runs before every aggregation to check that there are no NULL columns in HBase.
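The Hive queries and shell script are not shown in the article. The sketch below illustrates two of these checks in Python on rows that are assumed to have already been fetched (for example through a Hive or HBase client, not shown): recomputing totals from raw rows and comparing them with a sample of the aggregated table, and rejoining vertically split column groups on the row key to find NULL (missing) columns. The column names, the SUM aggregation, and the function names are hypothetical; the 30-column chunk size mirrors the Flume limit described above.

```python
from collections import defaultdict
from typing import Any, Dict, List, Sequence, Tuple

Row = Dict[str, Any]


def aggregate(raw_rows: Sequence[Row], key_fields: Sequence[str], value_field: str) -> Dict[Tuple, float]:
    """Recompute SUM(value_field) grouped by key_fields from raw rows."""
    totals: Dict[Tuple, float] = defaultdict(float)
    for row in raw_rows:
        totals[tuple(row[k] for k in key_fields)] += row[value_field]
    return dict(totals)


def check_aggregate_sample(
    sample: Sequence[Row], raw_rows: Sequence[Row], key_fields: Sequence[str],
    value_field: str, agg_field: str, tol: float = 1e-9,
) -> List[Tuple[Tuple, Any, Any]]:
    """Compare sampled aggregated rows with totals recomputed from raw data.

    Returns (key, aggregated_value, expected_value) for every mismatch.
    """
    expected = aggregate(raw_rows, key_fields, value_field)
    mismatches = []
    for row in sample:
        key = tuple(row[k] for k in key_fields)
        exp = expected.get(key)
        if exp is None or abs(exp - row[agg_field]) > tol:
            mismatches.append((key, row[agg_field], exp))
    return mismatches


def split_columns(record: Row, row_key: str, columns: Sequence[str], chunk_size: int = 30) -> List[Row]:
    """Split one wide record into column groups that all carry the same row key."""
    return [
        {row_key: record[row_key], **{c: record[c] for c in columns[i:i + chunk_size]}}
        for i in range(0, len(columns), chunk_size)
    ]


def null_columns(parts: Sequence[Row], row_key: str, columns: Sequence[str]) -> Dict[Any, List[str]]:
    """Rejoin column groups on the row key; report columns that are missing or None."""
    merged: Dict[Any, Row] = defaultdict(dict)
    for part in parts:
        merged[part[row_key]].update(part)
    report = {}
    for key, row in merged.items():
        missing = [c for c in columns if row.get(c) is None]
        if missing:
            report[key] = missing
    return report
```

An empty result from `null_columns` means every split part arrived and every column was populated; a non-empty result points to the row keys and columns to investigate before aggregation runs.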

Step 3: Reports Validation

- Data Validation: I tested the data in the PHP-based report for different combinations of time ranges. The crux of testing here is that if data for a specific period is missing from the report, I have to trace the whole process backwards to find the stage at which the data was lost. Data can be dropped when Impala fetches it for the report, during Hive aggregation, while Flume loads it, or during script-based pre-processing. This is strictly manual and requires access to the whole system, as well as patience.
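In our project this backward tracing was done by hand. If you record which time periods have data at each stage, the reasoning can be sketched in a few lines of Python. The stage names and the idea of collecting a set of periods per stage are assumptions for illustration, and the sketch also assumes that once data is lost at a stage, it does not reappear further downstream.

```python
from typing import Dict, Optional, Sequence, Set

# Stages in the order data flows through them (illustrative names).
STAGES = ["pre-processing scripts", "Flume load", "Hive aggregation", "Impala report query"]


def locate_loss(
    period: str, periods_by_stage: Dict[str, Set[str]], stages: Sequence[str] = STAGES
) -> Optional[str]:
    """Walk backwards from the report to find the stage where `period` was dropped.

    Returns None if the period is present in the report, the first stage if it
    never arrived at all, and otherwise the first downstream stage that no
    longer has the data.
    """
    for i in range(len(stages) - 1, -1, -1):
        if period in periods_by_stage.get(stages[i], set()):
            # Data is present here, so any loss happened at the next stage downstream.
            return stages[i + 1] if i + 1 < len(stages) else None
    # Absent everywhere: the data never reached the first stage.
    return stages[0]
```

The walk starts at the report and moves upstream, following the same order in which the stages are listed above.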

Although this approach solved the problem, testing Big Data applications still poses some challenges.

Big Data Testing Challenges

Expensive to create a test environment

Creating a test environment for Big Data projects is expensive. Given the risks involved in handling data, testing in the development environment is not advisable, so separate machines for testing become unavoidable. The cost varies with the volume of data.

Challenging to obtain the right, meaningful test data

This one can be a real show-stopper, and it is vital to get it right. With the variety and volume of data, getting meaningful test data takes more work. To solve this, find a sample of data that covers all the negative test cases. It can be time-consuming, but it needs to be done!
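One way to approach this is to scan a candidate dataset and keep one example record for every negative case you care about, reporting any case the data does not cover yet. The sketch below assumes three hypothetical negative cases (missing fields, unparseable timestamps, negative usage values) and the field names `ts` and `bytes`; replace them with checks that fit your own data.

```python
from datetime import datetime
from typing import Any, Callable, Dict, Iterable, List, Tuple

Record = Dict[str, Any]


def parses_as_timestamp(value: Any) -> bool:
    """True if value is a string in the (assumed) 'YYYY-MM-DD HH:MM' format."""
    try:
        datetime.strptime(value, "%Y-%m-%d %H:%M")
        return True
    except (TypeError, ValueError):
        return False


# Hypothetical negative cases; each predicate flags a record that exercises the case.
NEGATIVE_CASES: Dict[str, Callable[[Record], bool]] = {
    "missing_field": lambda r: any(v is None or v == "" for v in r.values()),
    "bad_timestamp": lambda r: not parses_as_timestamp(r.get("ts")),
    "negative_usage": lambda r: isinstance(r.get("bytes"), (int, float)) and r["bytes"] < 0,
}


def pick_negative_samples(
    records: Iterable[Record], cases: Dict[str, Callable[[Record], bool]] = NEGATIVE_CASES
) -> Tuple[Dict[str, Record], List[str]]:
    """Keep the first record found for each negative case; also return uncovered cases."""
    samples: Dict[str, Record] = {}
    for record in records:
        for name, is_case in cases.items():
            if name not in samples and is_case(record):
                samples[name] = record
        if len(samples) == len(cases):
            break  # every case is covered; no need to scan further
    missing = [name for name in cases if name not in samples]
    return samples, missing
```

Any case names returned in `missing` show which negative scenarios still need hand-crafted or synthetic records.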

I hope this article helps those looking for a testing methodology for Big Data applications.

Have you followed some other process? I would love to hear from you. Do share your story with me!