5 Efficient Ways to Extract Text from Articles

Most of you might be wondering what the above text means. It means –Natural language processing is unique in Chinese, and yes, we are not mortified by the cluelessness in this matter. The reason is that these days we have plenty of translation tools available that are easily accessible and trouble-free.

But what happens when a machine is asked to accomplish the same thing. How will a computer understand the language we humans speak? Well, this certainly seemed impossible many decades ago. However, English Mathematician Sir Alan Turing had faith in the ability of a computer to understand and interact with humans with the human language as a medium. This is possible today because of Natural Language Processing.

Natural language processing (NLP) is an up-surging field in computer science and artificial intelligence and is becoming interesting daily. The main intention of NLP is to give a computer the ability to understand texts and words as humans understand. So accordingly, gathering textual data becomes the key ingredient of it.

Although “Quality matters more than quantity,” as stated by Lucius Annaeus Seneca, quality and quantity are more important and vital in data science. Hence, collecting huge amounts of textual data is necessary for Machine learning and NLP. This data can be collected through various resources. Out of which news articles and blogs available on the Internet are quite edacious.

In this Blog, I am going to explain 5 easy and efficient methods by which you can extract text from news articles easily with just a few lines of code.

(Note : All the methods explained below are based on Python programming language)

Newspaper3k

It is an amazing library that allows you to extract and parse news articles. It not only extracts the text of an article but also gives useful insights without any additional effort.

You only have to get the URL of the article you want the text extracted.

From newspaper import Article

#url of any article

article_url =

“https://www.msn.com/en-in/news/science/pfizer-expects-to-make-nearly —as-much-revenue-just-from-covid-19-vaccines-in-2021-as-it-earned-in all-of-2020/ar-AAQeTLq”

def newspaper_text_extraction(article_url):

article = Article(article_url)

article.download()

article.parse()

return article. title,article.text

#You can check the output text by calling the function

Goose3

Goose is also a python module used for text extraction. It was originally created in Java and has recently been rewritten in Python. Its work is quite similar to that of the newspaper module.

from goose3 import Goose

def goose_text_extraction(article_url):

g = Goose()

article = g.extract(article_url)

return article.cleaned_text, article.title

Html2text

In this method, the html2text library, along with Python’s requests module, is used. The HTML web page content is taken from the article URL using requests and parsed with the HTML2Text function. The html2text library is not peculiarly designed for article text extraction. Hence, an additional port is required in this method to clean the extracted text. This can be done easily by creating a function separately for cleaning the text.

import requests

import html2text

def extract_html2text(article_url):

response = requests.get(article_url)

html_str = response.content

h = html2text.HTML2Text()

h.ignore_links = True

h.ignore_images = True

text = h.handle(str(html_str))

return text

Readability – lxml and BeautifulSoup

This method is constructed by combining the Readability and Beautifulsoup modules in Python.

The Readability module pulls out the main body and text and cleans it up when given an HTML document.

We parse the document summary of the article with BeautifulSoup object And scrape all the paragraph tags accordingly.

def extract_text(html_str):

doc = Document(html_str)

soup = BeautifulSoup(doc.summary(), ‘lxml’)

s = [p.getText() for p in soup.find_all(‘p’)]

return doc.title(, ‘\n’.join(s)

Media-specific extraction

Creating a scraping function designed specifically for any news media from which you want the text extracted is an exact option. Each media company publishes its articles with a standard HTML structure, the same for all the pieces.

You can create a media-specific extraction function by using the Requests and Beautifulsoup libraries and the article’s URL as the input parameter.

def msn_articles(html_str):

soup = BeautifulSoup(html_str, ‘lxml’)

text_content = soup.find(‘header’,

attrs={‘class’: ‘collection-headline-flex’}).h1

r1 = text_content.text.strip()

text_content = soup.find(‘article’).find_all(‘pm)

r2 = ”

for i in text_content:

r2 += i.text.strip()

title = r1

body = r2

return title, body

Below is the text extracted from the following article –

(‘Pfizer expects to make nearly as much revenue just from COVID-19 vaccines in 2021 as it earned in all of 2020’,‘(c) Pfizer expects to make nearly as much revenue just from COVID-19 vaccines in 2021 as it earned in all of 2020\n\nPfizer expects to make nearly as much money from vaccines in 2021 as it earned in total in 2020.\n\nThe drugmaker expects vaccine sales to rake in $36 billion this year.\n\nPfizer expects to make nearly as much revenue just from COVID-19 vaccine sales alone in 2021 as it earned in all of 2020, the drugmaker said on Tuesday.\n\n

The company said it expects revenue from the vaccines to be $36 billion by the end of 2021, up from an estimated $33.5 billion the company had predicted earlier in the year.\n\nIn 2020, it brought in $41.9 billion in revenue.\n\nPfizer is the first and only COVID-19 vaccine approved in the US, and the Food and Drug Administration authorized Pfizer\’s vaccine for kids ages 5 to 11 last week, making it the first to be available to younger children.\n\nPfizer reached agreements with governments worldwide in 2020 and 2021 to distribute its vaccine.

The company said Tuesday that it expects to deliver 2.3 billion doses of the shot globally by the end of the year.\n\nAccording to the US Centers for Disease Control and Prevention, over 247 million doses of the Pfizer vaccine have been administered since the pandemic began.\n\nBut nearly a year since the launch of large-scale vaccination rollouts, pharmaceutical companies continue to face allegations of corporate greed.\n\n

Mistrust of Big Pharma may be influencing vaccine skepticism among Americans, experts told Insider\’s Allana Akhtar.\n\nPfizer has faced backlash from activists – including Amnesty International – who accuse the company of putting its bottom line over people\’s wellbeing.\n\nIn a statement Tuesday, Pfizer CEO Albert Bourla said the company will donate 1 billion doses of its COVID-19 vaccine to the US government for a “not-for-profit price” so they can be donated to developing nations around the world.’)

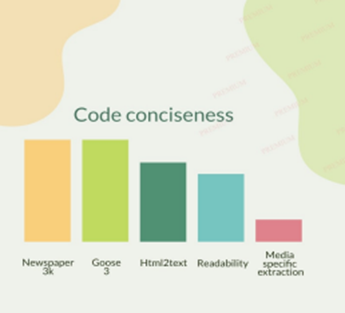

I will share my analysis of these methods based on my experience. The first four methods work for multiple media articles, out of which Readability is useful almost 90 percent of the time and is a coherent approach. A bit of extra effort is required for Html2text, and the output is comparatively less precise than the others. Newspaper3k and Goose3 are pretty easy to use and time-saving.

If the extracted text needs to be ideal and flawless, then media-specific extraction is the one you should go with. But the main drawback of this method is that it needs to be designed separately for each media, which is a tedious job.

A small future scope for this particular method could be creating a single parent function with the optimum functionality and passing all the attributes and their values for different media in a JSON document. Slicing the domain name from the URL gives a unique string that can be used as a key to store the attributes and their values in the JSON document.

Below are some graphs that will help understand the evaluation of all the methods.

So now you can extract the text from any article easily and use it for different purposes per your requirements. Try using other methods for scraping as a single method won’t be adequate for all the pieces on the Internet.

If you enjoyed this article, share it with your friends and colleagues! And do contact me if you have any questions or suggestions you would like to discuss.

~ Vikrant Kulkarni

Senior software Engineer